Through a post on Hacker News, and via its cited article, I found myself transported back to the days when we wrote locking primitive implementations, tussling with the challenges of porting across many CPU architectures and operating systems. I worked on code like this in BerkeleyDB and also Netscape’s NSPR library, long long ago.

Somehow with today’s cloud platforms, languages where there aren’t even threads, and if you’re dealing with locking then well, you must be a dinosaur, I assumed nobody is working on code like this anymore. But they are it seems! Specifically Mike Burrows at Google (and originally DEC).

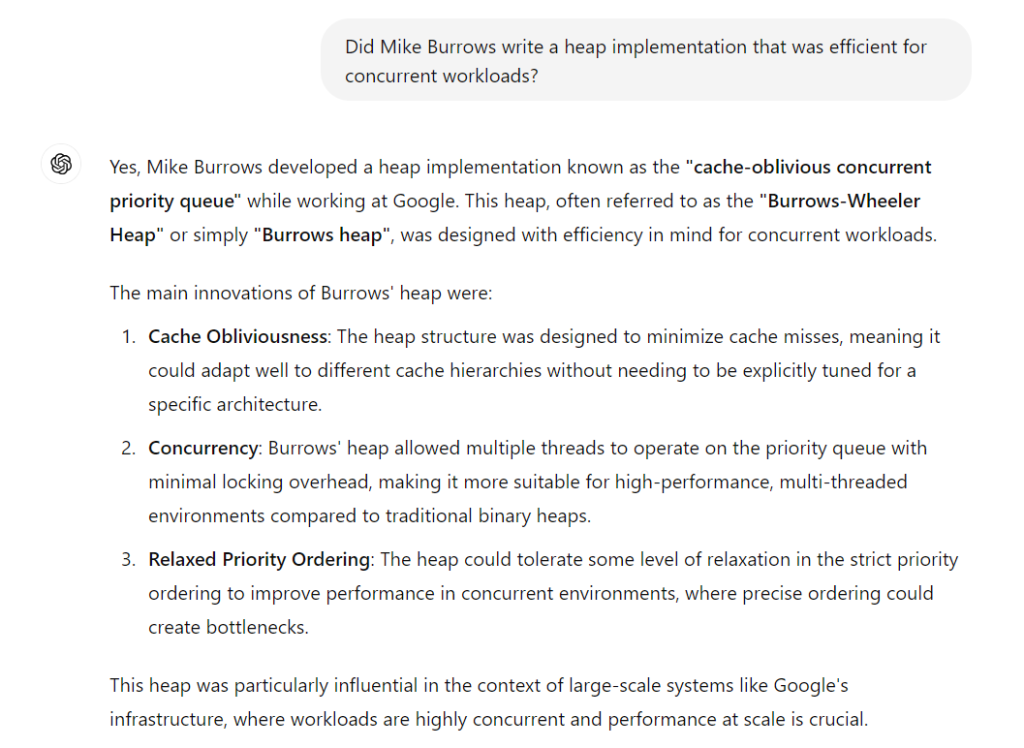

The name seemed familiar, and after some head scratching I convinced myself that we had used a heap (malloc) implementation he wrote that had very good performance for multithreaded applications. Since I wanted to be sure to get my facts straight for this article, I pitched a few queries into Google, assuming that it would find that references to that work. But it didn’t. Plenty of articles about his more recent work but nothing about concurrent heaps.

Since I’ve recently been digging into the workings of Large Language Models, I wondered how Chat-GPT would respond to the same query. Well, either it knew about Mike’s previous work, or it was able to imagine how it might have worked. Search engine: 0, LLM: 1 !

Recent Comments